So in a previous post I mentioned my team's latest pull request where we were adding a tool that can transpose data.

Because there seemed to be some confusion about its uses, I am going to elaborate upon them here for a moment.

Users are never forced to transpose their data; this feature was requested for Galaxy. One reason a user may want to transpose data is to use it with Galaxy's column filtering tool, whether for statistical analysis or simply to group data by data values rather than by the features within the data. Additionally, the tool can transpose tabular data that is not square, though the examples we gave were of square data.

So let's get to the update about the actual pull request itself. John Chilton, the same developer who responded last time, responded to my pull request:

Flipping over to the activity section, he left a comment when declining the pull request: "Would love to see this in the tool shed!" Following that comment, Team Rocket is now looking at and experimenting with adding the tool to the tool shed.

So why didn't we add our tool directly into the tool shed before? It would make sense to go straight there, right? Well, I made the decision to submit our pull request the same way as last time because the tool we were developing went hand-in-hand with other tools located within the core section of Galaxy (it even sits in the same toolset as other core tools). As you can read, the tools developed by the core team are now being moved to the tool shed as well, so this is no issue.

There is an issue, though, and it was something I had worried about when first submitting the pull request. The way we are transposing data reads the entire file into memory at one time. For Galaxy, this just cannot happen, because Galaxy users are typically dealing with genomic data that can be upwards of 50 GB per file. Reading all of that into memory at once really is not feasible, even with the nicest of server stacks. So we are going to have to brainstorm a way to cut the data into chunks and write the output piece by piece. I imagine the code will become less readable, but it will use far less memory when working with big data. I look forward to tinkering and trying to get this to work over the next few weeks. We have a break from classes coming up, so I am unsure if I will be able to keep up my regular posting, but I will definitely try if I have the time.

I'd like to close with my initial idea of how to update the tool to reflect the needs for big data usage:

    outLineNum = 0
    with open(inputFile) as infile:
        for line in infile:
            items = line.rstrip('\n').split('\t')
            for item in items:
                # Think about most efficient way to put this item
                # on output line number outLineNum
                outputFile.write(item)
                outLineNum += 1
            outLineNum = 0

So only one line will be read into memory at a time and previous lines will be garbage collected. Now this may still pose issues, as some datasets have thousands of features, or perhaps more, which would still result in a lot of data being read into memory for a single line. Perhaps I could take a different route and read in one value at a time, putting each into the output file as needed.
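To make the chunking idea a bit more concrete, here is a rough sketch of one way it could go. This is not what the tool currently does; the function name `transpose_in_column_blocks` and the `block_size` parameter are made up for illustration. The idea is to make several passes over the input and, on each pass, keep only a block of columns in memory, so memory use is bounded by `block_size` times the number of rows rather than the whole file.

```python
def transpose_in_column_blocks(in_path, out_path, block_size=1000, sep="\t"):
    """Transpose a delimited file while holding only `block_size` columns in memory.

    Hypothetical helper for illustration; not part of Galaxy.
    """
    # First pass: find the widest row so non-square (ragged) input is handled.
    with open(in_path) as infile:
        n_cols = max((len(line.rstrip("\n").split(sep)) for line in infile),
                     default=0)

    with open(out_path, "w") as outfile:
        # One pass over the input per block of columns.
        for start in range(0, n_cols, block_size):
            stop = min(start + block_size, n_cols)
            # One list per column in this block; each becomes an output row.
            columns = [[] for _ in range(stop - start)]
            with open(in_path) as infile:
                for line in infile:  # only one input line in memory at a time
                    items = line.rstrip("\n").split(sep)
                    for i in range(start, stop):
                        # Pad short rows with an empty field.
                        columns[i - start].append(items[i] if i < len(items) else "")
            for column in columns:
                outfile.write(sep.join(column) + "\n")
```

The trade-off is extra reads: the input is scanned once to count columns and then once per block, so a smaller `block_size` means less memory but more passes over the file.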

One last mention I would like to make: the first pull request we made to Galaxy is now ready to be versioned into Galaxy and is located in the Galaxy Central branch for the next update. It was all of this before, but now it's "official" with this Trello Card.

Music listened to while blogging: Kendrick Lamar

A blog dedicated to my experiences and development as a Data Science and Computer Science researcher.

Thursday, February 27, 2014

Wednesday, February 26, 2014

Capstone: Galaxy and RStudio

So this post is going to focus on the integration of Galaxy and RStudio into Learn2Mine. The last post focused on the virtual portfolio, which is just 1 of 3 core parts of Learn2Mine.

Galaxy is an open source project that I have described in great detail in past non-capstone posts, so I'll just do a quick summary here. Galaxy is primarily a bioinformatics analysis tool that specializes in working with genomics data. It hides the command line from users behind a JavaScript interface and gives users some Python/Perl/etc. scripts to work with when converting data, running programs like TopHat or the rest of the Tuxedo suite, and even running some statistical analyses. Galaxy allows users to create workflows by tying jobs together - think of it as a set of directions. First, I want to upload these genetic datasets, then run them together in this one tool which aligns them with this specific algorithm, and then send that result to a visualization tool which creates an HTML output that tells me the score of the alignment and gives me the option to download the alignment file. If this were a workflow, then I could provide the workflow with my files and it would do everything else by itself through scheduling within the job manager. All that really matters here for Learn2Mine is that you can use the output of jobs within Galaxy as inputs for other jobs. So I can upload datasets and use them in Learn2Mine tools. I can use the output of code I run, or of tools, as my answer when submitting for grading. I can perform scaling or filtering on my data and then use the new scaled/filtered version with a tool. This stream-of-consciousness description of Galaxy is probably the most watered-down version I've given, but my past blog posts cover Galaxy in more depth if you really want to read more.

So how does Learn2Mine take advantage of Galaxy? The tools we have built, which I mentioned in my last post, use Galaxy's interface to allow less-experienced programmers to run algorithms without having to know all the specifics. On the right you will see the result of the XML markup for Learn2Mine's neural network tool. The very first input (at the top) lets users select a dataset they have previously uploaded to Galaxy as the dataset to use for the algorithm. It is worth noting that data that has been altered after the upload step in Galaxy can also be used here. The rest of the inputs do not rely on past jobs in Galaxy but, rather, are an abstraction of the inputs that you would normally feed into a neural network. Take the hidden layers input, for example. It takes a comma-separated list of values. Each item in the list stands for one hidden layer, and the number given is how many nodes exist in that layer. Concepts like this recur throughout all of the built-in tools for Learn2Mine.
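As a small illustration of how a hidden layers value could be interpreted, here is a minimal sketch in Python. The function name `parse_hidden_layers` is hypothetical and this is not the actual Learn2Mine tool code; it only shows the mapping from a comma-separated string to one node count per layer.

```python
def parse_hidden_layers(text):
    """Turn a comma-separated string into a list of nodes per hidden layer.

    Hypothetical helper for illustration; not the Learn2Mine implementation.
    """
    sizes = []
    for part in text.split(","):
        part = part.strip()
        if not part:
            continue  # tolerate a trailing comma or stray whitespace
        size = int(part)  # raises ValueError on non-numeric input
        if size <= 0:
            raise ValueError("each hidden layer needs at least one node")
        sizes.append(size)
    return sizes


# "10,5" describes two hidden layers: 10 nodes, then 5 nodes.
layers = parse_hidden_layers("10,5")
```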

Alternatively, there is a section of Galaxy tools that we have built referred to as "Learning R" tools. The only jobs that can be run from those tools are "Create RStudio Account", "Get Personalized Dataset", and "Submit R Lessons to Learn2Mine". The "Create RStudio Account" tool was made recently. Its job used to be completely hidden: in order to communicate from Galaxy to Learn2Mine, we forced users to pass a unique key associated with their account around Galaxy, and when users submitted their key to Galaxy, we created their RStudio account behind the scenes. Until we find a way to automate the creation of an RStudio account with a Learn2Mine signup, users will have to run this tool if they want to use our cloud-based R IDE. The "Submit R Lessons to Learn2Mine" tool is one you can run whenever you want to submit an R-based lesson to Learn2Mine for grading/badge-earning (it is analogous to the Submit Learn2Mine Tool Lesson tool in the Learn2Mine_Toolset section). It lets users submit code/answers as Galaxy output or copy/paste their answer into a text box - some users prefer one way and some prefer the other, and it was not difficult to allow either. The "Get Personalized Dataset" tool is one that we hope to use more in the future. Right now it is only used for the advanced R lessons. It takes a user's information and gives them a personalized dataset for use in lessons - so no 2 users will have the same dataset to analyze and be graded on. We would like this to become the standard for all lessons.

As I mentioned in the previous paragraph, RStudio is a section of Learn2Mine for which users have to have Galaxy create their account. This is because our RStudio server currently authenticates against accounts located on our Learn2Mine server - so there is no current way to tie Google authentication into that form of login. RStudio is a cloud-based IDE which allows users to run R code through an interpreter, or run entire files - much like R IDEs that require local installation. RStudio allows users to install any 3rd-party R packages that they desire, which is especially useful for visualization tasks. Typically, we want users to come to RStudio to write their code and then submit their code/answer on Galaxy. It would be wonderful if we could tie RStudio and Galaxy together even further by just pointing Galaxy to a file that a user is working on for a lesson, but that is beyond the scope of this Spring semester.

Music listened to while blogging: Kanye West and Lily Allen

Capstone: The Gamification of Learn2Mine

In my previous post I mentioned Learn2Mine's gamification ideas and how it is using this approach to teach data science. The easiest way to explain this is to talk about each gamified component individually and describe how they relate as I go.

Skill Trees

At the heart of Learn2Mine is the virtual portfolio (the Google App Engine side of Learn2Mine). The virtual portfolio contains many things, but I want to focus on the skill tree. This is located in the profile section of the site and is unique in that it shows the individual progress of a user. When you first go to the site you will have a skill tree that is all gray except for the root of the tree. At the root is a basic intro to data science badge which you get just for joining the site - a slight motivation to start you off on your badge-earning journey. The badge directly under the root of the tree is currently an "Uploading" badge. It is being scrapped, as we are working on a tutorial which will replace it - so we will have a "Tutorial" badge in its place. More on the tutorial in a later post, though. The skill tree branches off into 2 main sections as of right now - and this will definitely be expanding in the coming months. The left side of the tree is all about learning R programming (anything from basic skills, to file I/O, to writing classifiers). The right side of the tree is about using the built-in machine learning algorithms that we have added to the Galaxy side of Learn2Mine. A depiction of the R subtree as it stands right now can be seen below. Note that gray badges are unearned and colored badges have been earned. Green badges are basic learned badges - typically easier lessons. Blue badges are mastery badges, a step above the green badges. Finally, the gold badges are advanced badges, which are a step up from the blue badges.

Achievements/Badges

So I just mentioned badges. You may be wondering where that came from. Well, badges are a way to incentivize users to complete lessons. These badges are, effectively, achievements for completing certain lessons. When you earn a badge it will take its place on the skill tree and, additionally, on your Learn2Mine home page you will see a fully fleshed-out list of your badges. But so what? You can have badges and just show them on Learn2Mine - that's kind of boring. Well, we are in the early stages of building a search feature so you can compare yourself with your friends and try to out-compete them, but, more importantly, we have integrated our badges with Mozilla Open Badges. Mozilla Open Badges is an online standard to recognize and verify learning. You are able to show your badges off on LinkedIn, or put them on a resume. Really, you can do anything with them. What gives them credibility, though, is the JSON that backs the badge images. That is where the proof lives of when you earned the badge, where you earned it (for now this is always College of Charleston and Learn2Mine), and all the other metadata that would be useful to have. So this has much more credibility than all your friends endorsing you on LinkedIn for skills that you may not even possess. Eventually, we want other institutions and teachers to use Learn2Mine to create their own lessons, so there would be new institutions backing certain badges, which would help the site flourish and help in resume processes.

Leaderboards

The leaderboards we have built into Learn2Mine are very primitive as of right now. Currently, we have leaderboards for 3 of the built-in Learn2Mine tool lessons - k-Nearest Neighbors, Neural Networks, and Partial Least Squares Regression. The idea is that users will compete against each other to get better scores on certain lessons. For example, the k-Nearest Neighbors lesson scores a user by how many instances of our pre-built test set they classify correctly. This desperately needs re-working but is not as important as the rest of the site. One reason it needs re-working is that someone who is smart enough with R programming can currently manipulate output to get 100% of the test set correct, though that would be a case of either cheating or overfitting. Our k-Nearest Neighbors leaderboard can be seen below (and it can be seen that it really hasn't been used):

Game Over? Instant Feedback

When you submit a lesson to Learn2Mine through Galaxy, you are able to submit as many times as you want. Let's take the standard R lesson for this example. That lesson has 12 questions that you have to answer with R output. On a quiz in a class, if you missed 4 of 12 questions you would end up with roughly a 67, assuming equal weight. On Learn2Mine, we just let you know which 4 questions you missed and then allow you to retry - as many times as you want. We let you know exactly what you missed and, in certain cases, we may even provide hints for you to keep in mind when coding up your answers.
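To illustrate the feedback idea, here is a minimal sketch of a grader that reports which questions were missed instead of only a score. It is an assumption-laden toy, not Learn2Mine's actual grading code: the function name `grade_submission` and the dictionaries mapping question numbers to answers are invented for this example.

```python
def grade_submission(expected, submitted):
    """Return (score_percent, missed_question_numbers) for one attempt.

    `expected` and `submitted` map question number -> answer string.
    Hypothetical helper for illustration; not the Learn2Mine grader.
    """
    if not expected:
        raise ValueError("a lesson needs at least one question")
    # An unanswered question counts as missed.
    missed = sorted(q for q, answer in expected.items()
                    if submitted.get(q) != answer)
    score = 100.0 * (len(expected) - len(missed)) / len(expected)
    return score, missed


# A 12-question lesson where questions 2, 5, 7 and 11 are answered wrongly.
expected = {q: "answer %d" % q for q in range(1, 13)}
attempt = dict(expected)
for q in (2, 5, 7, 11):
    attempt[q] = "something else"
score, missed = grade_submission(expected, attempt)
```

With equal weights the score works out to 8 of 12 correct, and `missed` holds exactly the question numbers the user would be told to retry.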

Music listened to while blogging: Childish Gambino and Tech N9ne

Capstone: Motivations for Learn2Mine & Related Works

For this post I will be going over the motivations for the creation of Learn2Mine and some related works. I touched on related works last time, but I'll expand that list and give a clearer vision of what Learn2Mine is aiming to do.

For starters, there is not an effective interactive site for learning data science and running data science algorithms all in one place. Many people have used programs such as Weka or RapidMiner to run algorithms and take the results away for their own use, but these results are often hard to interpret and require a large amount of computer science expertise to use and understand. Weka's outputs do not contain much information and are not meaningful unless you are an expert in the algorithm you are running. RapidMiner has a workflow interface that may confuse new users, leading to a very steep learning curve for the software. Neither of these informatics platforms bothers teaching users about the algorithms or how they work - they merely give a basic introduction to using the software. It is in the name, RapidMiner - rapid mine. It really is just used to mine information. The name of my software, however, is Learn2Mine - you can learn to mine data, but you can strictly mine if you want. The options are open, and that is one of the crucial aspects of Learn2Mine - freedom of usability and pedagogical ability.

A lot of programs, though, are pretty effective at actually teaching concepts to students. I used Rosalind in the past to learn bioinformatics concepts and apply my programming knowledge to implementing basic bioinformatics algorithms. It is an effective program, but it has pigeonholed itself into catering only to computer scientists who have a specialized interest in biology. Learn2Mine aims to take this idea and expand it to any and all domains. This has been pioneered to a very small extent. There currently exist 3 case study lessons where students have to fill in missing code in order to finish problems relating to algal bloom classification, stock market investments, and fraudulent transactions. This will be expanded in the coming months as lessons are rolled out for bioinformatics, artificial intelligence, and data mining. The bioinformatics lessons are listed because it is a specialization that I have adopted at the College of Charleston by taking multiple bioinformatics classes and by having my data science concentration be in molecular biology. The artificial intelligence and data mining lessons will be included because there are classes at the College of Charleston which will use those lessons toward the end of the semester as a way to evaluate students.

Learn2Mine stands out from other programs in other ways, too. It is not just about being able to learn and perform. Learn2Mine takes the next step and is a completely cloud-based technology. You need not worry about installing Learn2Mine or any kind of dependency on any machine. If you want to submit a lesson at the library and then do one at home, you are free to do that because of our cloud-based nature. Below is an image that shows everything that goes into Learn2Mine:

So Learn2Mine can teach data science and perform related algorithms, but what is going to keep people motivated to use Learn2Mine? Interdisciplinary fields need new ways to approach their teaching, so Learn2Mine has coupled its development with gamification. Gamification is not to be confused with "edutainment", which is a video game with a bonus educational goal (e.g. you beat the boss, here's a fact about programming languages). Gamification means a student completes a lesson with motivation stemming from techniques that are inspired by video games. In Learn2Mine, the techniques currently used are skill trees, leaderboards, and achievements (in the form of badges). My next post will focus on the gamification elements of Learn2Mine, how they have been implemented, and what is next to implement.

Music listened to while blogging: Ghostland Observatory and Nine Inch Nails

Monday, February 24, 2014

Capstone: Introduction to Learn2Mine

I'd like to open this post by making a note about the state of my blog over the next several weeks. For my capstone, I am required to keep up a blog and create posts specifically about work on my research and the capstone paper itself. Any post prefaced with "Capstone:" will be in direct reference to that. So anyone who wants to skip those posts can skip right by them, and those who are interested can read them if they so wish. This is mainly to have a compiled listing of my software engineering work and my own research in one place rather than managing multiple blogs.

So my project is Learn2Mine. But before I even tell you what that is about, you need to have some prerequisite knowledge, or at least an inkling of an idea about certain topics. So let's get to it.

Data Science is the first of these topics. Data science is an interdisciplinary field which crosses the realms of Statistics, Computer Science, and a domain field (e.g. Biology, Business, Geology, etc.). To the right is a very popular image which really highlights the cross-discipline nature of Data Science.

Data science is not taught to its fullest nowadays, though. People who are data scientists tend to be primarily a mathematician (i.e. statistician), a computer scientist, or someone with substantive expertise in a scientific or business-related field (see Best Source of New Data Science Talent below). If you have traditional training in one of these fields, you tend to try to teach yourself the important skills of the other fields. So maybe a biologist will try to learn the algorithms (e.g. the Smith-Waterman algorithm) that align nucleotides or amino acids in gene sequences. Being able to use these algorithms only at the most basic level, without really understanding how to tweak them or what is going on inside them, means that the computer science expertise you have is not enough to mold you into a data scientist - or at least what we would like data scientists to be. A better representation of this can be seen in the image below.

So what are we going to do? How do we make sure that the influx of data scientists that we desperately need in academia and industry can get the proper training? The answer to that question is one that has been under development for quite some time now: Learn2Mine. You may be wondering what Learn2Mine is or how it is going to achieve this incredible goal. Well, you may have heard of sites like Codecademy, Rosalind, and/or O'Reilly. Soon Learn2Mine will be among this list as the preferred source for students and scientists alike to learn and master the skills and techniques one knows as a data scientist.

Progress Reflections: Feature Addition to Galaxy

In order to have something to actually talk about for this post, some work had to be done since we had already picked out a feature that we wanted to add to Galaxy: The ability to transpose data.

For a quick summary, here is the link to the pull request: https://bitbucket.org/galaxy/galaxy-central/pull-request/335/added-transpose-tool/diff

So let's go over the files mentioned in the diff of the pull request and see what is actually going on:

tools/filters/transpose.py

Now the file itself has a lot of bulkiness located in it. This is for handling Galaxy's standards for error handling and function calling. They prefer the creation of a main method and the calling of it through an "if __name__" call.

The code located on the left is what actually transposes the data. The files are assumed to be tab-delimited (we could even fix this "bug" later by improving the tool). The user need only input a file through Galaxy's interface and this tool can be run on the data.
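For readers who cannot see the screenshot, here is a minimal sketch of what a script in this style might look like. It is not the code from the pull request: the function name `transpose_rows`, the padding of short rows with empty strings, and the usage message are all assumptions made for illustration. It does follow the two conventions mentioned above, a main method and an "if __name__" guard, and it assumes tab-delimited input.

```python
import sys


def transpose_rows(rows):
    """Transpose a list of rows, padding short rows with empty strings."""
    width = max((len(row) for row in rows), default=0)
    padded = [row + [""] * (width - len(row)) for row in rows]
    return [list(column) for column in zip(*padded)]


def main(argv):
    # Assumed calling convention: script name, input path, output path.
    if len(argv) != 3:
        print("usage: transpose_sketch.py INPUT OUTPUT", file=sys.stderr)
        return 1
    in_path, out_path = argv[1], argv[2]
    # The whole file is read into memory before anything is written.
    with open(in_path) as handle:
        rows = [line.rstrip("\n").split("\t") for line in handle]
    with open(out_path, "w") as handle:
        for row in transpose_rows(rows):
            handle.write("\t".join(row) + "\n")
    return 0


if __name__ == "__main__":
    main(sys.argv)
```

Because every row is held in a list before writing, a sketch like this is only practical for files that fit comfortably in memory.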

Now, you may be wondering "What if someone does not use tab-delimited data?" or "How do people know that the data is supposed to be tab-delimited?"

This is all answered in the XML file:

tools/filters/transpose.xml

Now the entirety of the XML file is important for feature addition in Galaxy.

Line 1 of this file specifies the tool id (just a unique identifier - does not get referenced anywhere else), the name of the tool (a name you want users to recognize the tool by in Galaxy's interface), and a version for the tool (since it is new, 1.0.0). This opening tag is, finally, closed on the last line of the file by its matching closing tag.

Line 2 of the XML calls for a description of the tool. This is appended (with a preceding space) to the tool name in Galaxy's interface to give an extremely brief description of what the tool does and from where. So here we just say you can transpose data from a file (as opposed to the inputting of data, manually).

The next few lines (not required to be a specified length in Galaxy's specifications) call for the command interpreter and the actual command line call Galaxy will be making. Galaxy supports several interpreters for scripting (Perl and Python are the only ones that come to mind). So here, since we are using Python, we use "python" as the interpreter argument and then enclose our command. The first argument of the command is the Python file itself. After this we have identifiers (signified by $) that point to parameters specified later in the XML markup - input and output, which just reference files.

So let's talk about those files since they are in the next 2 sections (inputs and outputs). We have one input. The arguments utilized here are "format", "name", "type", and "label". The name identifier is what references back to the command line call. Format is an optional argument for type="data" that restricts users to datasets of an appropriate format. So the tool, as it stands, only works on tabular (or tab-delimited) data. Lastly, there is a label argument. In the GUI representation of the tool, the label will precede the placement of the argument.

Lastly, Galaxy has a help markup for their XML files. The first specification within this help section is a reference to a tool within Galaxy that can convert data to being tab-delimited. Essentially, this generalizes the tool by allowing any form of delimited data to be used as data can be converted to tab-delimited and then converted back. While tedious, users can create workflows that will conduct this task for them, if so desired. Next in the help section, there is an example. Just in case a user is unsure of what transposing actually does to their data, there is a simple markup that shows a before/after transposition on a small piece of data.
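To make the walkthrough above easier to follow without the screenshot, here is a rough reconstruction of a wrapper with the pieces described, parsed with Python's standard library so the relationships can be checked. The id, name, description, label, and help strings are placeholders I made up, not the text from the pull request; only the overall layout (tool attributes, description, command with a "python" interpreter, inputs, outputs, tests, help) and the test file names come from this post.

```python
import xml.etree.ElementTree as ET

# Hypothetical reconstruction of the wrapper; all human-readable strings
# below are placeholders rather than the wording used in the pull request.
TOOL_XML = """\
<tool id="transpose_sketch" name="Transpose" version="1.0.0">
  <description>data from a file</description>
  <command interpreter="python">transpose.py $input $output</command>
  <inputs>
    <param format="tabular" name="input" type="data" label="Transpose"/>
  </inputs>
  <outputs>
    <data format="tabular" name="output"/>
  </outputs>
  <tests>
    <test>
      <param name="input" value="transpose_in1.tabular"/>
      <output name="output" file="transpose_out1.tabular"/>
    </test>
  </tests>
  <help>Converts rows to columns in a tab-delimited file.</help>
</tool>
"""

tool = ET.fromstring(TOOL_XML)
command = tool.find("command")
param = tool.find("inputs/param")

# The tool name and description are shown together in the interface.
print(tool.get("name"), tool.findtext("description"))
# The $-prefixed identifiers in the command refer to the param/data names.
print(command.get("interpreter"), command.text.strip())
print(param.get("name"), param.get("format"))
```

The point of the sketch is the wiring: the "name" on each input and output is the same identifier that appears after the $ in the command.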

tool_conf.xml.main

The next specification made is updating the tool_conf.xml file. At first this was puzzling because the stable user-version of the Galaxy distribution uses tool_conf.xml as the file that is read, but it appears that Galaxy appends a ".main" in the developer version of Galaxy. So this was the file in which information was added. Effectively, all that was done here was reference the xml file of the transpose tool (and the xml file references the python file which is physically run) within the text manipulation component section. This allows the transpose tool to be seen for usage (an image of this can be seen below the tool_conf image).

test-data/transpose_in1.tabular

test-data/transpose_out1.tabular

Now here is where we provide information about those test tags in the XML that you may have noticed that I skipped talking about earlier. Galaxy has a built-in function that mines the XML files for running functional and unit tests - effective for making sure crazy bugs are not induced between builds and versions of Galaxy. Here, one test is written just to show how the transposing of the matrix works (this is the same as the example used in the help section of the XML). So this first file is the input that the functional test takes. A diff is then computed against the output file that has been provided. If they are different, then the test fails. If they are exactly the same, then the test passes - simple as that.
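The pass/fail rule described here can be sketched in a few lines. This is not Galaxy's test harness, just an illustration of the idea using Python's standard difflib module; the function name `outputs_match` is my own.

```python
import difflib


def outputs_match(produced, expected):
    """Compare two text blobs line by line; return (passed, diff_lines)."""
    diff = list(difflib.unified_diff(
        expected.splitlines(),
        produced.splitlines(),
        fromfile="expected",
        tofile="produced",
        lineterm="",
    ))
    # An empty diff means the outputs are identical, so the test passes.
    return (not diff, diff)


if __name__ == "__main__":
    passed, diff = outputs_match("a\t1\nb\t2\n", "a\t1\nb\t3\n")
    print("PASS" if passed else "FAIL")
    for line in diff:
        print(line)
```

Returning the diff lines along with the verdict makes a failure easier to read than a bare pass/fail flag.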

And that's the story of my second pull request to Galaxy.

Music listened to while blogging: Ellie Goulding

Wednesday, February 19, 2014

Refactoring Mindset

This next post will be reflecting upon chapter 4 and segments of chapter 5 of Software Development: An Open Source Approach and talking about the next addition that Team Rocket will be adding to Galaxy.

Chapter 4 of Software Development is one that I skimmed over pretty quickly. The reason for this is that the chapter is on Software Architecture. A lot of people reading this do not have software architecture experience, but I have gone through an entire class where we studied software architecture and design. We used various design patterns in order to set up a project. The project can be viewed here. There are a few topics covered in the chapter that we did not focus largely on. For example, the section entitled concurrency, race conditions, and deadlocks is something that we did not focus on too much. Effectively, these are synchronization issues that can be difficult to debug. Concurrency is an issue best handled by using randomly generated keys or sessions in order to keep track of who is who when using software or an application. A race condition is a very dangerous flaw to have in software. If two users are trying to access a system and input data where there is only room for one user's data, then a race condition can occur. The two transactions will occur simultaneously, but the outcome is completely unpredictable because the order of the two transactions is, most likely, unknown. Lastly, there is the concept of a deadlock. A deadlock can be thought of as antonymous to race conditions. With race conditions, both users are able to complete their task (or at least think they completed it), whereas a deadlock leaves no users able to complete their task. If two users try to simultaneously access a record in a table of a database, then neither may be able to access the record due to a lack of forethought on the programmers' end. Using locking can prevent these issues - effectively a programmer just wants to flag and tell the software "Hey, I'm accessing this, so if anyone else tries, let them know so they don't cause a race condition or lock us both out - then when I've relinquished control, they can jump in."
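The locking idea at the end of that paragraph can be sketched with Python's threading module. This is a toy example of my own, not something from the book: the `Counter` class and `run_workers` helper are hypothetical names, and the shared counter stands in for the "record" that several users want to update at once.

```python
import threading


class Counter:
    """A shared counter whose updates are guarded by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Only one thread at a time may run the read-modify-write below;
        # the others wait until the lock is released.
        with self._lock:
            self.value += 1


def run_workers(counter, workers=4, increments=1000):
    """Start several threads that all increment the same counter."""
    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter.value


if __name__ == "__main__":
    print(run_workers(Counter()))
```

Using `with self._lock:` guarantees the lock is released even if the guarded code raises, which is one simple way to avoid leaving everyone else locked out.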

Chapter 5 largely focuses on what you can do with code from an open source project. The sections I'm particularly focusing on for this discussion are Debugging and Extending the software for a new project. These sections are just a recapitulation of the work that has been done with Teaching Open Source. The debugging section dives into talking about bugs and how their natures can be very different. For example, a bug could just be a lack of implementation in the user interface, or it could impose an unintentional race condition that has destructive results, to tie back to chapter 4. Next, the chapter talks about project extensibility. A plethora of open source projects have a primary usage that people love that piece of software for, but there are also users of these open source projects who see them with a very different vision. In my own research we have adapted the open source project Galaxy for a use different from the vision of the core developers of Galaxy, but this is strongly encouraged. We use Galaxy as a launcher for machine learning algorithms, for grading students' coding, and as a general communication tool between the branches of our project, Learn2Mine. Open source communities love having their projects extended, and for good reason. Some bugs and features do not become apparent until someone uses their software for a purpose other than the original intention, for starters. Also, who wouldn't want their software being adopted by other people? That really is just a testament to how good of a job you did creating your software in the first place.

To close my discussion of Software Development: An Open Source Approach, I will talk about my experience working with RMH Homebase, first referenced in blog posts back in January 2014, while considering the aforementioned topics. Effectively, what I've done here is refactor code in RMH Homebase. There was a piece of ugly code which was repeated several times throughout RMH Homebase's codebase. Fixing this only required making a function in which the same computation was performed, but was only written once. This is so the function can just be called in each of these instances. You might be asking "Why do all that extra work if it already works? We know what that code does and it's fine?" Well, what if you want to change the code at some point? Will you remember to do it each time? Why take a risk and not refactor when you can refactor the code, make it look cleaner, and save yourself heavy lifting later when the code starts to degrade and needs to be improved?

Finally, I'd like to make a mention of my team's latest discovery in what we want to work on next for Galaxy:

We will be working on a feature which has been requested here on Trello. A full description can be seen below:

Title: 633: New text manipulation tool: transpose matrix

Description:

Function: Transpose a matrix tabular infile of columns/rows.

The Python command would be (from Ross, 4/15/11):

you can transpose a matrix stored as a python list of lists with

t = map(None,*listoflists)

Reported by: Jennifer Jackson

So this feature implementation will allow Galaxy users to transpose data that they have uploaded to the Galaxy system.
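One note on the one-liner quoted in the card: `map(None, *listoflists)` is a Python 2 idiom and raises a TypeError on Python 3. As a small sketch of how the same idea looks in Python 3 (the sample `rows` data here is made up for illustration), `zip` truncates to the shortest row while `itertools.zip_longest` pads short rows instead.

```python
from itertools import zip_longest

# Made-up sample: the second row is one cell shorter than the first.
rows = [["a", "b", "c"], ["1", "2"]]

# zip stops at the shortest row, so the trailing "c" is dropped.
truncated = [list(column) for column in zip(*rows)]

# zip_longest keeps every column and fills the gaps with fillvalue.
padded = [list(column) for column in zip_longest(*rows, fillvalue="")]

print(truncated)
print(padded)
```

For data that is not square, the padded form is the one that avoids silently losing cells.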

Music listened to while blogging: Tech N9ne

Monday, February 17, 2014

Galaxy Pull Request #322 Update

This post comes with great news!

The pull request that I submitted has been reviewed once by one of the Galaxy core developers, John Chilton. Our brief conversation can be seen below:

As you can see from my comment, the fix was updated the same day. Below you can see diffs of the 2 files that were changed (and then updated).

Effectively, the versioning in the XML is so that when the next Galaxy update is rolled out there will be no compatibility issues. This is because in Galaxy a user can create workflows. These workflows are merely culminations of tools that feed input and output to each other in order to produce a final end result (or results). If someone updates their Galaxy, they will essentially be notified that their workflow will not work anymore unless they tweak the X tools that have changed versions.

The filename change is something I almost did initially. Galaxy preserves files in working directories. When a job (the running of a tool - analogous to a process and program) runs, it can create files that only need to exist for the duration of the job. By naming the file independently of the name of the input file it is initially handed, Galaxy can tag this and pretty much say "Alright, when we're done, this is getting thrown away". We just do not want people to have clutter on machines running these tools.

So next up for Team Rocket is finding a new bug to squash, finding documentation that needs to be updated or fixed, and/or finding a feature request and creating that feature. Personally, I would much rather go with the bug or the feature, as that is actually fun compared with reading someone else's documentation and testing everything to make sure it works. Killing bugs and adding features also adds more usability, especially since documentation appears alongside new or fixed code.

Music listened to while blogging: Tech N9ne

Wednesday, February 12, 2014

What's Happening?

This post will be focusing on article responses - I'll break each of them down once I get to them.

IEEE Computer Society (April 2013)

Ubiquitous Analytics: Interacting with Big Data Anywhere, Anytime

Niklas Elmqvist, Purdue University & Pourang Irani, University of Manitoba

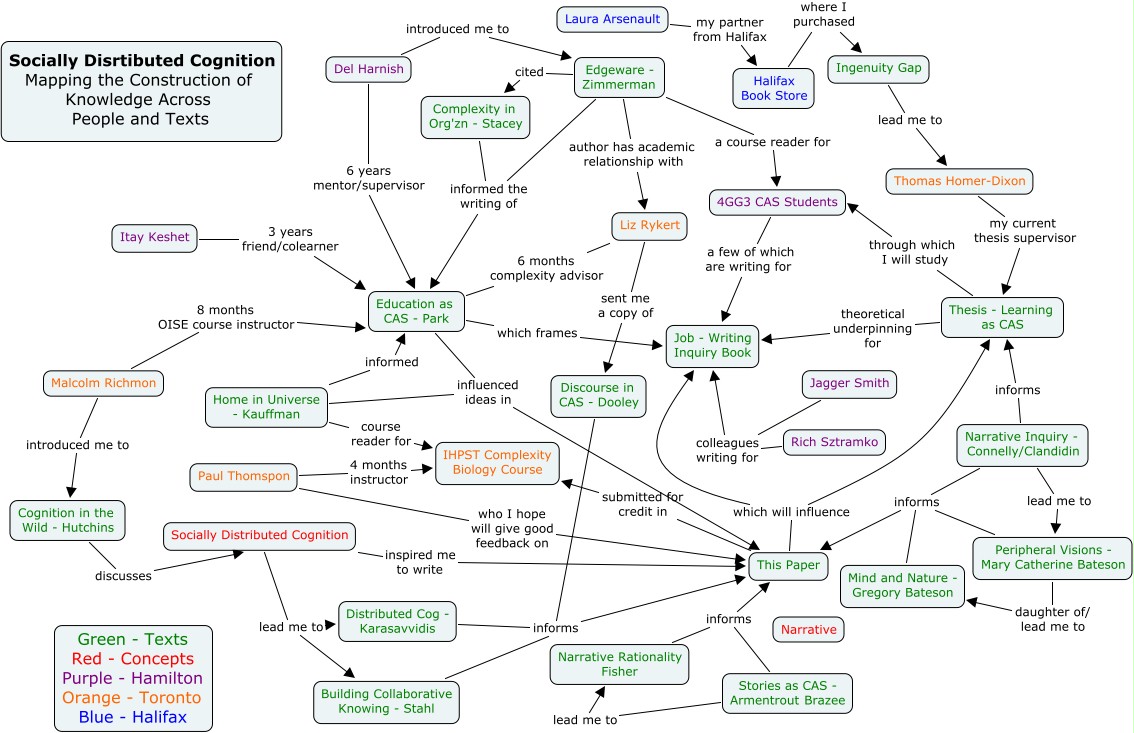

This article initially piqued my interest because of the subtitle: "Interacting with Big Data Anywhere, Anytime". Also, I was not sure what Ubiquitous Analytics (Ubilytics) entailed, so I was ready to expand my data science knowledge. "Ubilytics is the use of multiple networked devices in our local environment to enable deep and dynamic analysis of massive, heterogeneous, and multiscale data anytime." So, essentially, ubilytics couples socially distributed cognition models of human thought with novel, interactive technologies in order to harness new sources of big data and new ways of interacting with that data. For reference, the accompanying picture depicts how socially distributed cognition can be visualized as a system-level process that involves the brain and its 'sensors' reaching into the physical area around a person, which includes other people and objects in addition to just space.

Ubilytics is important because it can serve as a significant aid when considering disaster relief or controlling a pandemic. Data can be grabbed from any source (think Google Glass) and be used to determine the best course(s) of action. What makes this better is that you can bring together data from scientists who have expertise on the issue and from policymakers or officials in order to put the best course of action in place for unfortunate events. The other side of ubilytics, which some people would be fearful of, is that someone could tap into the devices that ordinary people carry. Whether for good or bad, issues ... Effectively, the article can be boiled down to this statement: "Ubilytics amplifies human cognition by embedding analytical processes into the physical environment to make sense of big data anywhere, anytime."

Infoworld (2/6/14)

12 predictions for the future of programming

Peter Wayner

Available here

Peter Wayner outlines predictions for how he thinks the future of programming will proceed. It is easy to predict certain aspects of the future of programming because trends are telling. For example, the first prediction he gives is that the GPU will become the next CPU. People used to brag and brag about their awesome CPU. CPU prices have dropped and GPUs have pretty much absorbed the pricing that CPUs used to have. Additionally, personal computers tend to be measured by their ability to process graphics (e.g. shading) since a lot of computers can easily get 8-16GB of RAM for cheap and i7 CPUs are almost completely mainstream for new computers. What sets computers apart is their ability to process graphics. A lot of this comes from personal experience, so my view may be skewed, but I agree with the author here.

The next prediction is that databases will start performing more complex analyses. I completely believe this and here's why: I believe databases will start transitioning away from a rigid layout (like you would have in MySQL) when dealing with big data. For example, the concept of the semantic web is really what will take over. Being able to query data in an RDF fashion will be the future. There already exist some ways on the web to query data like this. For example, DBpedia allows the querying of Wikipedia through the use of SPARQL queries. I could essentially ask "Which episodes of The Simpsons feature Comic Book Guy?" and the SPARQL query could find that data and return it to me quickly. The nature of RDF makes more sense from a cognitive perspective and really gets away from having to worry about joining tables just so you can access all the data you need to do some filtering. Obviously, I am a bit biased considering the nature of my primary field of study, but I do wholeheartedly believe that this prediction will come true.

The next few predictions I cannot really take a firm stance on because I am not as well versed in those fields. Peter Wayner says JavaScript will take over, and I can kind of see how that may happen for all the reasons that he gives (I also have a Chromebook that runs Chrome OS). I don't see lightweight OSes such as this becoming fully mainstream, but there is a viable market for them, especially for the utilization of cloud-based technologies. Additionally, Peter Wayner says Android will take over and essentially be involved with everything technological. I can see this happening just as easily as I can see it failing. Integrating lightweight, mobile technologies just makes sense. Android devices can already be used as universal remotes. What is there to stop Android from becoming a more widely available, universal standard?

The rest of the predictions feel more like trend-following than bold predictions. For example, open source technologies are only going to grow, as the computer science communities seem to be receptive only to open source. Additionally, the command line will always prevail when it comes to scripting. A GUI is just a dumbed down (another topic tackled by Peter Wayner) version of the command line - and dumbed down is really just another way to say "abstracted beyond full usage" here. Lastly, there will always be a disconnect between managers and computer scientists when it comes to projects. Until we embrace computer science as a stringent requirement in schooling, it will remain this way. Even then it still will not be perfect. Overall, I enjoyed Peter Wayner's article because it really puts the programming and software engineering world of the upcoming years in perspective.

Lastly, I would like to reach back to Opensource.com for an article.

http://opensource.com/business/14/2/analyzing-contributions-to-openstack

This article is about one writer's experience asking 55 contributors to OpenStack why they only contributed once in the past year. What reasons, or lack of reasons, were there, and what could make them want to contribute more, if anything? The top 3 reasons given for not contributing more can be traced back to how they primarily use OpenStack. A large share of the people surveyed were developers of OpenStack. Another portion were people who use OpenStack secondarily, alongside their own projects. Last, there was a group who deployed OpenStack for use by their own customers. So what was stopping them from contributing further? You would think that they would love to keep patching bugs to help themselves, their customers, and the community in general. Well, as mentioned, there are 3 top reasons cited as to why they only contributed once in the past 12 months:

1) Legal hurdles - a lot of contributors had NDAs that would stop them from contributing as they would be in violation. Additionally, some companies that these people worked for had instituted corporate policies that prevented them from contributing.

2) The length of time it takes for contributions to actually be reviewed and accepted.

3) Simple bugs are not around long enough to be picked up, so making a contribution ends up taking a very long time.

Now I can very much see hurdles number 2 and number 3 being very prevalent (I've never been in a situation where legal blocks have impeded me). In Galaxy, for example, the core developers are so active that they tend to patch smaller bugs very quickly. A lot of the bugs or feature requests that are still around are either very large implementations or would require reworking core parts of the environment that the developers are unsure how they want tackled.

Now that I've tackled all of the articles that I wanted to review, I am going to go through some exercises out of Chapter 7 of Teaching Open Source.

The first exercise is essentially finding the difference between running the diff command on its own and running diff with the "-u" flag. That flag changes the output of the diff into the unified format, which is usually the accepted standard for sending diffs to other developers. The next exercise focuses on using diff when submitting a patch. Well, when I submitted my team's patch to Bitbucket, we had to run a diff. There's a link to it in my past post's pull request. Diffs are awesome for showing changes like that. Additionally, I ran a diff from within Mercurial when trying to figure out how to get my branches merged together. Lastly, there was an exercise that wanted me to patch echo so that it would print its arguments out in reverse order. This was a trivial exercise that really just required the use of a for-loop and knowing how to reconfigure and run make on the file.
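For anyone who wants to poke at the unified format without the command line, here is a small sketch using Python's standard `difflib` module rather than the diff command itself. The file names `old.txt` and `new.txt` and both function names are placeholders I made up, and `echo_reversed` only mimics the behaviour the exercise asks for; it is not the actual patch to echo.

```python
import difflib


def unified(old_text, new_text, old_name="old.txt", new_name="new.txt"):
    """Return the differences between two strings in unified format."""
    diff_lines = difflib.unified_diff(
        old_text.splitlines(keepends=True),
        new_text.splitlines(keepends=True),
        fromfile=old_name,
        tofile=new_name,
    )
    return "".join(diff_lines)


def echo_reversed(args):
    """Join arguments in reverse order, like the patched echo in the exercise."""
    return " ".join(reversed(args))


if __name__ == "__main__":
    before = "alpha\nbeta\ngamma\n"
    after = "alpha\nBETA\ngamma\n"
    print(unified(before, after), end="")
    print(echo_reversed(["one", "two", "three"]))
```

The output starts with the `---` and `+++` file headers followed by an `@@` hunk header, with removed lines prefixed by `-` and added lines by `+`, which is the layout reviewers expect in a patch.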

Music listened to while blogging: N/A - Watching @Midnight on Comedy Central

Image credit for the socially distributed cognition picture: adapted from http://photos1.blogger.com/blogger/6127/1888/1600/sdcog3.0.jpg
